Hi,

I notice in the lightmap-settings.json file there is a lightmap max size parameter. If I overwrite this value, will the engine respect the changes and allow for higher res lightmaps?

Cheers

Michael

This file stores the lightmap-related settings used for the last bake. These stored settings are only used when post-processing is launched manually, for the reasons described in this post.

Anyway, the maximum lightmap size is a deprecated setting in this file, because Shapespark now always bakes lightmaps of at most 4k x 4k. Using a larger size would risk the scene not working at all, because according to WebGL Stats ~30% of devices still do not support larger textures.
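To make the trade-off concrete, here is a minimal Python sketch of the rule described above: whatever size is requested, the baked lightmap is clamped to 4096 texels per side, and a texture only loads on a device whose maximum texture size is at least that large. In WebGL that per-device limit is what `gl.getParameter(gl.MAX_TEXTURE_SIZE)` reports. The function names and the example device limit are hypothetical illustrations, not part of Shapespark.

```python
LIGHTMAP_MAX_SIZE = 4096  # 4k x 4k upper bound described above


def effective_lightmap_size(requested: int, cap: int = LIGHTMAP_MAX_SIZE) -> int:
    """Hypothetical helper: side length actually baked, never above the cap."""
    if requested <= 0:
        raise ValueError("lightmap size must be positive")
    return min(requested, cap)


def device_can_load(texture_size: int, device_max_texture_size: int) -> bool:
    """A square texture loads only if its side fits the device's MAX_TEXTURE_SIZE."""
    return texture_size <= device_max_texture_size


if __name__ == "__main__":
    device_limit = 4096  # illustrative limit for an older device, not measured data
    for requested in (2048, 8192):
        size = effective_lightmap_size(requested)
        # Compare loading the raw request versus the clamped size.
        print(requested, size,
              device_can_load(requested, device_limit),
              device_can_load(size, device_limit))
```

An 8192 lightmap would fail on such a device, while the clamped 4096 one still loads.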

@wojtek I guess this also applies to all the other maps? Like metallic or bump?

That’s a good point. I’m guessing the importer compresses all images higher than that res?

For other maps we use an even lower limit, 2048, to keep the scene download size within reasonable bounds.

The original input maps are automatically downscaled based on their UV mapping. For example, if one repetition of a tiled texture corresponds to 10cm in the model, there is no point in keeping the texture at 2k resolution, because when the scene is viewed the repetition usually occupies only a small fraction of the screen. So such a texture can be downscaled to a resolution much lower than 2k. The goal here is to strike a balance between sufficiently high resolution and a small scene download size.
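As a rough illustration of downscaling driven by UV mapping, the Python sketch below estimates how many pixels a tiled texture needs from the real-world size of one repetition, then caps the result at the original size and at 2048. The target density `TEXELS_PER_METER` is a hypothetical value chosen for the example; Shapespark's actual heuristic is not described here.

```python
import math

MAX_MAP_SIZE = 2048       # upper limit for non-lightmap maps, as stated above
TEXELS_PER_METER = 1024   # HYPOTHETICAL target texel density, not a Shapespark value


def downscaled_size(original_px: int, repeat_size_m: float,
                    texels_per_meter: float = TEXELS_PER_METER,
                    cap: int = MAX_MAP_SIZE) -> int:
    """Estimate the side length (in pixels) to keep for a tiled texture.

    repeat_size_m is the real-world size that one repetition of the
    texture covers in the model, as determined by the UV mapping.
    The result never exceeds the original size or the global cap.
    """
    if original_px <= 0 or repeat_size_m <= 0:
        raise ValueError("sizes must be positive")
    needed = max(1, math.ceil(repeat_size_m * texels_per_meter))
    return min(original_px, cap, needed)


if __name__ == "__main__":
    # A 2k texture repeated every 10 cm needs only about 100 pixels per side.
    print(downscaled_size(2048, 0.10))   # 103
    # A 4k texture stretched over 3 m is clamped to the 2048 cap.
    print(downscaled_size(4096, 3.0))    # 2048
```

The denser the tiling, the smaller the kept texture, which is exactly where download size is saved.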

@wojtek, would it be possible to make the downscaling overridable on a per-material basis after import? For example, I have a 1k towel texture that looks like a mess after auto-downscaling.

Currently I’m using a workaround: importing a larger object with the same bitmap applied to it 1:1, which results in no downscaling, and then hiding the unnecessary object.

It would be better if I could click the problem object, go to the material tab, and tick a “don’t downscale” checkbox or use a max downscale % slider.

A global option would be good too, for scenarios where the scene is only a few small objects and so doesn’t need any optimisation, for example a product showcase such as a watch or a pair of shoes where the user would want to zoom in close.

Do you have the scene with the messy towel texture on our hosting? I would like to take a look at it, because from the description it sounds like it could be some bug.

Currently, we do not have plans for a per-material downscaling setting. For now, as you said, you can add a hidden mesh somewhere in the model with a UV mapping such that a small part of the texture covers a large mesh surface. This ensures that the texture will not be downscaled.

We will consider a global downscaling setting, but for now the above workaround should also help for product showcases: you could duplicate the product at a larger scale and hide it.
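The hidden-mesh workaround can be reasoned about with a small Python sketch. It assumes, without confirmation from Shapespark, that a texture shared by several meshes keeps the resolution required by its most demanding use, and it reuses a hypothetical texel density of 1024 per meter. Under those assumptions it computes how much larger a hidden duplicate must be so the texture keeps its full resolution. The 25 cm repetition for the 1k towel is an invented example value.

```python
import math

MAX_MAP_SIZE = 2048      # cap for non-lightmap maps, per the earlier reply
TEXELS_PER_METER = 1024  # HYPOTHETICAL target texel density


def required_px(repeat_size_m: float) -> int:
    """Pixels per side one use of the texture needs, from its UV repetition size."""
    return max(1, math.ceil(repeat_size_m * TEXELS_PER_METER))


def kept_size(original_px: int, repeat_sizes_m: list[float]) -> int:
    """ASSUMED rule: a shared texture keeps what its most demanding use needs."""
    needed = max(required_px(s) for s in repeat_sizes_m)
    return min(original_px, MAX_MAP_SIZE, needed)


def hidden_copy_scale(original_px: int, repeat_size_m: float) -> float:
    """Scale factor for a hidden duplicate so its use demands the full resolution."""
    target = min(original_px, MAX_MAP_SIZE)
    return max(1.0, target / (repeat_size_m * TEXELS_PER_METER))


if __name__ == "__main__":
    # A 1k towel texture repeated every 25 cm would be cut to 256 px...
    print(kept_size(1024, [0.25]))
    scale = hidden_copy_scale(1024, 0.25)
    # ...but adding a hidden copy scaled by this factor keeps all 1024 px.
    print(scale, kept_size(1024, [0.25, 0.25 * scale]))
```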

Hi Wojtek, I’ve just emailed you the fbx of the towel, the texture, and the way it should look.

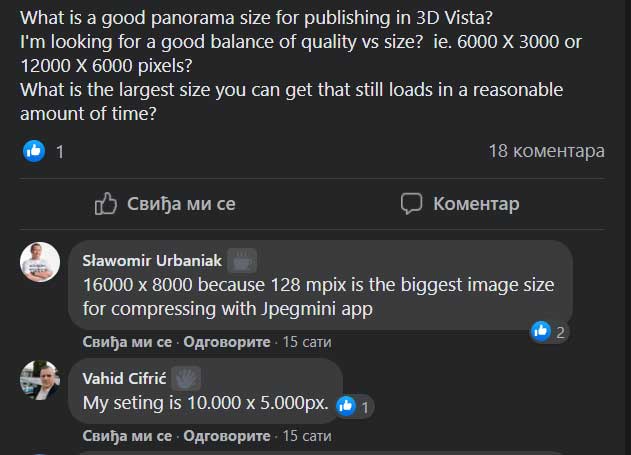

3D Vista uses high-resolution panoramas.

However, the program divides these panoramas into smaller parts.

Can this technique be applied to lightmaps in Shapespark?


@Vladan, thanks. Shapespark already uses multiple lightmaps to overcome the maximum texture size limitation. Splitting the lightmaps into even smaller pieces wouldn’t work as well as it does for panoramas, because lightmaps represent light information spread across the whole scene, while panoramas are local: a single panorama presents one concrete part of the scene.
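To illustrate how several size-limited lightmaps can together hold more texels than one texture allows, here is a Python sketch that distributes rectangular lightmap charts across as many 4096 x 4096 atlases as needed. It uses simple shelf packing, a swapped-in technique chosen for brevity; Shapespark's actual lightmap layout algorithm is not described in this thread, and the `Placement` type and `pack_charts` function are hypothetical.

```python
from dataclasses import dataclass

ATLAS_SIZE = 4096  # lightmap side length limit described earlier in the thread


@dataclass
class Placement:
    chart: int   # index of the chart in the input list
    atlas: int   # which lightmap texture the chart lands in
    x: int
    y: int


def pack_charts(charts: list[tuple[int, int]], size: int = ATLAS_SIZE) -> list[Placement]:
    """Shelf-pack (width, height) charts into as many size x size atlases as needed."""
    # Tallest charts first, so each shelf's height is set by its first chart.
    order = sorted(range(len(charts)), key=lambda i: charts[i][1], reverse=True)
    placements: list[Placement] = []
    atlas, x, y, shelf_h = 0, 0, 0, 0
    for i in order:
        w, h = charts[i]
        if w > size or h > size:
            raise ValueError(f"chart {i} ({w}x{h}) exceeds the {size} limit")
        if x + w > size:       # current row is full: open a new shelf below it
            x, y, shelf_h = 0, y + shelf_h, 0
        if y + h > size:       # current atlas is full: start a new lightmap
            atlas, x, y, shelf_h = atlas + 1, 0, 0, 0
        placements.append(Placement(i, atlas, x, y))
        x += w
        shelf_h = max(shelf_h, h)
    return placements


if __name__ == "__main__":
    result = pack_charts([(2048, 2048)] * 5)
    print("lightmaps needed:", max(p.atlas for p in result) + 1)
```

Each chart still needs the same number of texels however the atlases are cut, which is why finer splitting alone does not buy extra lighting detail.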